The Nvidia Takeover in AI

- Tomás Woods

- Apr 16, 2024

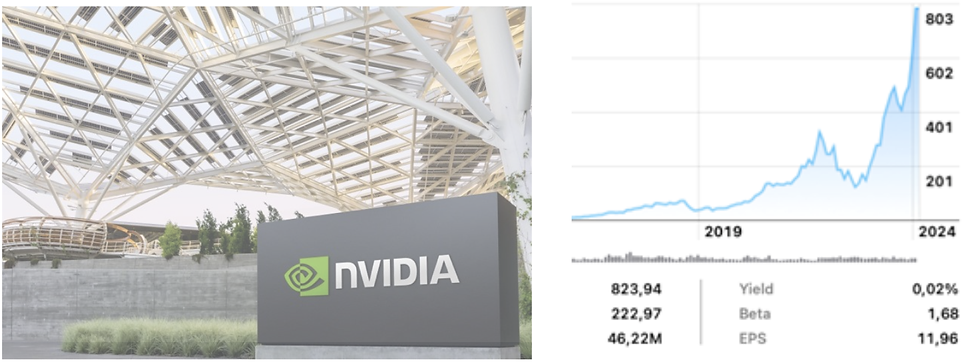

A McKinsey report published in June 2023 estimates that generative AI could add the equivalent of $2.6 trillion to $4.4 trillion annually across the 63 use cases it analyzed, and this is no surprise. Neural networks are among the most computationally intensive programs in use, and they require the most efficient, best-performing GPUs available. In fact, GPUs have been likened to a rare earth metal and even called the gold of the century, and Nvidia has taken the lead in supplying them. The company, now worth $1.93 trillion, is tipped to become America's biggest, and the 481% rise in its stock price over the past three years is a good indicator of that. In this article, we will explore the benefits of AI to the global economy, what GPUs are and how they work, and why more than 40,000 AI businesses choose Nvidia today.

AI models, like all other software, need the right hardware to run quickly and efficiently. While CPUs (central processing units) handle general computing tasks such as running applications and the OS (operating system), GPUs (graphics processing units) have become essential for workloads like generative AI. GPUs were originally used to render computer animations and graphics-intensive programs, but today their role extends far beyond that, to huge volumes of mathematical operations that the CPU simply wasn't designed to process efficiently. So although CPUs and GPUs are similar, in that they share the same kinds of internal components such as cores, memory and control units, their purposes are fundamentally different: a CPU has a few powerful cores optimized for running tasks one after another, while a GPU has thousands of simpler cores that perform many calculations at once.

To understand this field of computer hardware, we can look at the role of each internal component, starting with cores. Cores are the processing units inside a CPU or GPU that carry out all computations and logical operations. A core fetches instructions from the computer's memory in the form of bits (digital signals), decodes them, and executes them through logic gates; one pass through these steps is known as an instruction cycle.

Next comes memory, which can be divided into two broad types. Non-volatile memory, such as ROM (read-only memory) and storage drives, keeps its contents without power; storage drives in particular are slower but hold far greater volumes of data. RAM (random access memory) is much faster, but it holds information only temporarily and in smaller volumes. Then there is a less talked-about memory known as the cache, which sits on the processor chip itself, is even smaller than RAM, and can operate between 10 and 100 times faster. Cache is arranged in levels labeled L1, L2 and L3, going from the fastest and smallest to the slowest and largest; L1, and usually L2, is dedicated to each core, while L3 is typically shared among the cores.

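
To make the cache hierarchy more concrete, here is a minimal Python sketch of how a lookup might fall through L1, L2 and L3 before reaching RAM. It assumes tiny, made-up capacities (counted in addresses rather than bytes), least-recently-used (LRU) replacement and an inclusive hierarchy in which every level keeps a copy of recently used data. Real caches work on fixed-size cache lines, are usually set-associative and differ between processor designs. The class `CacheLevel` and the function `access` are hypothetical names used only for illustration.

```python
from __future__ import annotations

from collections import OrderedDict


class CacheLevel:
    """One cache level that evicts the least recently used address (LRU).

    Real caches store fixed-size lines, are usually set-associative and use
    hardware-specific replacement policies; this is a deliberate simplification.
    """

    def __init__(self, name: str, capacity: int) -> None:
        self.name = name
        self.capacity = capacity
        self.entries: OrderedDict[int, None] = OrderedDict()

    def contains(self, address: int) -> bool:
        """Return True on a hit and mark the address as most recently used."""
        if address in self.entries:
            self.entries.move_to_end(address)
            return True
        return False

    def fill(self, address: int) -> None:
        """Store an address, evicting the least recently used one if full."""
        self.entries[address] = None
        self.entries.move_to_end(address)
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)


def access(levels: list[CacheLevel], address: int) -> str:
    """Search L1, then L2, then L3, and fall back to RAM if every level misses."""
    served_by = "RAM"
    for level in levels:
        if level.contains(address):
            served_by = level.name
            break
    # Copy the data into every level that lacks it, so a repeat access hits in L1
    # (an "inclusive" hierarchy, which is an assumption of this sketch).
    for level in levels:
        if not level.contains(address):
            level.fill(address)
    return served_by


# Hypothetical capacities: L1 holds 2 addresses, L2 holds 4, L3 holds 8.
levels = [CacheLevel("L1", 2), CacheLevel("L2", 4), CacheLevel("L3", 8)]
trace = [1, 2, 1, 3, 4, 1, 2, 5, 3]
results = [access(levels, address) for address in trace]
print(results)  # ['RAM', 'RAM', 'L1', 'RAM', 'RAM', 'L2', 'L2', 'RAM', 'L3']
```

Addresses that are reused soon after their first access are served by the faster levels, which is why even a small cache can hide much of RAM's slower speed.
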
Finally, another key component that serves every CPU and GPU core is the memory management unit (MMU), which translates the virtual addresses that programs use into physical locations in RAM and prevents programs from accessing each other's memory.
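
The address translation an MMU performs can be sketched in a few lines of Python. This is a simplified, single-level model that assumes 4 KiB pages and a small hypothetical `page_table` dictionary; real MMUs walk multi-level page tables in hardware and cache recent translations in a translation lookaside buffer (TLB). The `translate` function is an illustrative name, not a real API.

```python
PAGE_SIZE = 4096  # 4 KiB pages: a common size, assumed here for illustration

# Hypothetical page table mapping virtual page numbers to physical frame numbers.
page_table = {0: 5, 1: 2, 2: 9}


def translate(virtual_address: int) -> int:
    """Translate a virtual address to a physical one, as an MMU does in hardware."""
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    if page_number not in page_table:
        # Real hardware raises a page fault and lets the operating system handle it.
        raise LookupError(f"page fault: virtual page {page_number} is not mapped")
    frame_number = page_table[page_number]
    # The offset within the page is unchanged; only the page number is remapped.
    return frame_number * PAGE_SIZE + offset


print(translate(4100))  # virtual page 1, offset 4 -> frame 2 -> 8196
```
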

As these hardware components advance to host ever-larger pieces of software, AI sets new standards and pushes these systems to levels of performance never seen before. Simply put, AI is a computing lasagna: layer upon layer of linear algebra operations, each helping to estimate how likely one piece of data is to be related to another. Today's AI systems are built on neural networks, which are trained on large volumes of data using a technique called deep learning. The size of a model is measured by its number of parameters, the internal weights that are adjusted during training. For instance, GPT-4 is reported to have around 1.76 trillion parameters, making it one of the largest models ever built (OpenAI has not officially confirmed its size), compared with around 20 billion for Amazon's Alexa model. As the AI industry gains value and is used by hundreds of millions of people daily, demand for hardware in data centers has grown exponentially, and companies like Nvidia have placed themselves in the lead.
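
The "lasagna" picture can be made concrete with a minimal Python sketch of a fully connected layer, the simplest kind of neural network layer, which computes y = W·x + b (a weight matrix times the input, plus a bias vector). Models such as GPT-4 use transformer layers rather than only fully connected ones, and their exact structure has not been published, so this only illustrates what "parameters" means. The weights and layer sizes below are arbitrary examples, and `dense_layer`, `relu` and `count_parameters` are hypothetical helper names.

```python
from __future__ import annotations


def dense_layer(inputs: list[float], weights: list[list[float]], biases: list[float]) -> list[float]:
    """Compute y = W x + b: each output is a weighted sum of all inputs plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def relu(values: list[float]) -> list[float]:
    """A common activation function that replaces negative values with zero."""
    return [max(0.0, v) for v in values]


def count_parameters(layer_sizes: list[int]) -> int:
    """Count the weights and biases of a stack of fully connected layers.

    A layer mapping n inputs to m outputs has an m-by-n weight matrix plus m biases.
    """
    return sum(n * m + m for n, m in zip(layer_sizes, layer_sizes[1:]))


# One layer with 2 inputs and 2 outputs (weights and biases are arbitrary examples).
hidden = relu(dense_layer([1.0, 2.0], [[0.5, -1.0], [2.0, 0.25]], [0.1, -0.5]))
print(hidden)  # [0.0, 2.0]

# A small hypothetical network: 784 inputs -> 128 hidden units -> 10 outputs.
print(count_parameters([784, 128, 10]))  # 101770
```

Each output's weighted sum is independent of the others, so all of them can be computed at the same time, which is exactly the kind of work GPUs are built for.
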

Nvidia was founded in California in 1993 and has specialized in graphics processors ever since, but its growth has only become dramatic in the past five years. This surge stems from the advantages GPUs offer for training and running AI models, a field that has itself boomed in recent years: parallel processing, scalability and energy efficiency. Parallel processing matters because it enables accelerated computing, which speeds up demanding applications such as data analytics, simulations, visualizations and, of course, AI by splitting the work into many small pieces that thousands of GPU cores process at the same time. Scalability also sets GPUs apart, since many GPUs can be linked together, up to supercomputer scale, to tackle workloads far too large for a single chip. Finally, for these highly parallel workloads GPUs offer an energy efficiency that CPUs were never designed to compete with. In fact, demand for high-performance GPUs has earned them the title of “gold of the decade”, and the technology has improved dramatically in a short time. According to a 2020 report by Georgetown University's Center for Security and Emerging Technology (CSET), once both production and operating costs are accounted for, AI chips are one to three orders of magnitude (10 to 1,000 times) more cost-effective than leading-node CPUs. Nvidia's chief scientist, Bill Dally, has likewise said that the company increased performance on AI inference by over 1,000x in the last 10 years. Without doubt, decades of heavy investment in research and development have given Nvidia's GPUs an AI-optimized architecture, strong energy efficiency and cost-effectiveness, which together have made them the best on the market. It is therefore no surprise that the company's market capitalization has surpassed those of Amazon and Alphabet, leaving it behind only Microsoft and Apple. Even so, some analysts predict it could become the largest company in the US within a few years.
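
The idea behind parallel processing, splitting one large job into independent pieces that run at the same time, can be sketched in Python with the standard library's `concurrent.futures` module. Here a pool of threads stands in for GPU cores, and the helpers `add_chunk` and `parallel_add` are hypothetical names. Note that in CPython the global interpreter lock (GIL) prevents threads from speeding up pure-Python arithmetic, so this shows only how the work is divided, not a real speedup; on an actual GPU the same pattern is typically written in CUDA, with each of thousands of threads handling one element.

```python
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor


def add_chunk(a: list[float], b: list[float], start: int, end: int) -> list[float]:
    """Add one slice of the two vectors; each slice is independent of the others."""
    return [a[i] + b[i] for i in range(start, end)]


def parallel_add(a: list[float], b: list[float], workers: int = 4) -> list[float]:
    """Split an element-wise addition into chunks and hand them to a pool of workers."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    n = len(a)
    chunk = max(1, -(-n // workers))  # ceiling division, at least 1 element per chunk
    bounds = [(start, min(start + chunk, n)) for start in range(0, n, chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(add_chunk, a, b, start, end) for start, end in bounds]
        # Collect results in submission order so the output keeps the original order.
        result: list[float] = []
        for future in futures:
            result.extend(future.result())
    return result


x = [float(i) for i in range(10)]
y = [float(10 * i) for i in range(10)]
print(parallel_add(x, y))
```

Because no chunk depends on another, adding more workers (or, on a GPU, more cores) lets more chunks be processed at once.
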

AI is already fueling industries ranging from medicine to urban planning, enabling accurate simulations of substances in the petrochemical and carbon-storage industries, and improving people's lives through autonomous cars and personalized algorithms. It is also evident that, thanks to hardware makers like Nvidia and the current advances in GPU technology, we are only scratching the surface of what AI can do.

Sources in MLA format:

“The Economic Potential of Generative AI: The Next Productivity Frontier.” McKinsey & Company, 14 June 2023, www.mckinsey.com/capabilities/mckinsey-digital/our-insights/the-economic-potential-of-generative-ai-the-next-productivity-frontier#key-insights.

“GPU vs CPU - Difference between Processing Units - AWS.” Amazon Web Services, Inc., aws.amazon.com/compare/the-difference-between-gpus-cpus/.

Lutkevich, Ben. “What Is Cache Memory? Cache Memory in Computers, Explained.” SearchStorage, www.techtarget.com/searchstorage/definition/cache-memory.

Khan, Saif M., and Alexander Mann. AI Chips: What They Are and Why They Matter. Center for Security and Emerging Technology, Apr. 2020, cset.georgetown.edu/wp-content/uploads/AI-Chips—What-They-Are-and-Why-They-Matter.pdf.
