Recent developments in artificial intelligence, and the race for artificial general intelligence

- Gabriel Gramicelli

- Jan 7, 2021


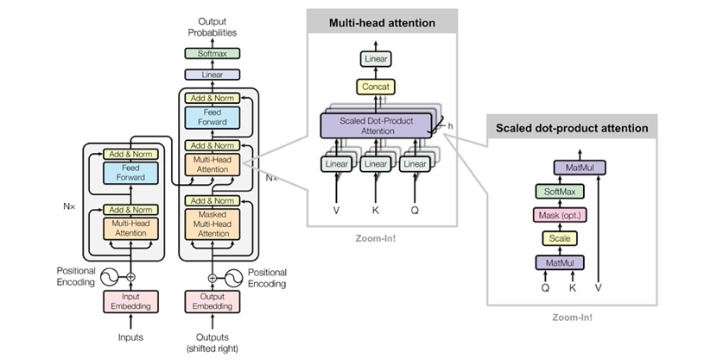

I. AI overview

A. Natural language processing and deep computer vision (classification problems)

Artificial intelligence is based on two beliefs: that problems can be solved using numbers, and that machines can learn. These two concepts are the basis for all of AI. AI aims to solve two types of problems: classification problems and regression problems.

In a classification problem, the computer's goal is to sort data into categories according to the knowledge it has already acquired. This may seem dull and simple, but such problems can be very complex. An example is a self-driving car, where the computer has to classify the car's next move: turn right or left, drive at 50 or 60 km/h, and so on.

Classification problems are mainly explored through natural language processing (NLP) and deep computer vision (DCV). NLP analyses text and classifies its meaning or emotion; NLP models include Apple's Siri on iOS and Google's search engine. DCV models analyse images and videos in order to decide what is in the picture or which direction to move; these include Tesla's self-driving software and Google Images search. A classification model's goal is to assign each input to the category we would want it to.

B. Data processing (regression problems)

The second and most abundant use of AI is solving regression problems. Regression problems are similar to the natural science lab experiments we do in school, where there is an independent variable (IV, the value that is changed) and a dependent variable (DV, the value being measured). The main difference is the complexity of the variables: an AI model can analyse the correlation between many different IVs and DVs at once, far more than a person could track by hand. A regression model's goal is to accurately predict a DV when given an IV.
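The difference between the two problem types can be sketched in a few lines of Python. This is a toy illustration, not how production systems work: the study-hours data, the sentiment centroids and the helper names `fit_line`, `predict` and `classify` are all made up for the example. The regression part fits a straight line with ordinary least squares, and the classification part uses a simple nearest-centroid rule.

```python
# Toy illustration: regression returns a number, classification returns a label.
# All data and function names below are hypothetical.

def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept for one independent variable."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope, intercept, x):
    """Predict the dependent variable (DV) for a given independent variable (IV)."""
    return slope * x + intercept


def classify(value, centroids):
    """Nearest-centroid classifier: return the label whose centroid is closest."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


# Regression: hours of study (IV) vs. test score (DV), made-up numbers.
hours = [1.0, 2.0, 3.0, 4.0]
scores = [52.0, 54.0, 56.0, 58.0]
slope, intercept = fit_line(hours, scores)
predicted_score = predict(slope, intercept, 5.0)

# Classification: label a made-up sentiment score as "negative" or "positive".
centroids = {"negative": -1.0, "positive": 1.0}
label = classify(0.4, centroids)

print(predicted_score, label)
```

The regression model answers "how much?" with a number, while the classifier answers "which one?" with a label from a fixed set.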
Examples of data processing models include Instagram's and YouTube's recommendation models.

II. Models and AI development in 2020

A. Transformer neural networks

A neural network (NN) is a network of connected neurons (functions) that work together. Training adjusts some of each function's values, called weights and biases, until the network's outputs fit the dataset well enough to perform accurate regressions or classifications. A transformer neural network (TNN) is a type of NN that shares most of its functions with other NNs but adds a distinctive one: attention. Attention lets the model weigh how relevant each piece of previously seen data is to the piece it is currently processing. TNNs are used in classification problems where earlier context matters, for example when an earlier word in a sentence or the driving speed on the last road affects the current classification.
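To make the ideas of a neuron and of attention more concrete, here is a minimal sketch in plain Python. It assumes a sigmoid activation for the neuron and uses scaled dot-product attention, the form commonly used in transformers, for a single query. The vectors are invented stand-ins for three words of a sentence, and the function names are illustrative only, not taken from any library.

```python
import math


def neuron(inputs, weights, bias):
    """One neuron: weighted sum of the inputs plus a bias, passed through a sigmoid."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    # Similarity between the current item (query) and every item seen (keys).
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The output is a weighted average of the values.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights


# Hypothetical 2-dimensional vectors standing in for three words of a sentence.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 1.0]  # the word currently being processed

output, weights = attention(query, keys, values)
print(weights)  # the third word gets the largest weight
print(output)
```

In a real transformer the queries, keys and values are themselves produced by learned weights, so training decides what the model pays attention to.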

Fig. 1: TNN architecture

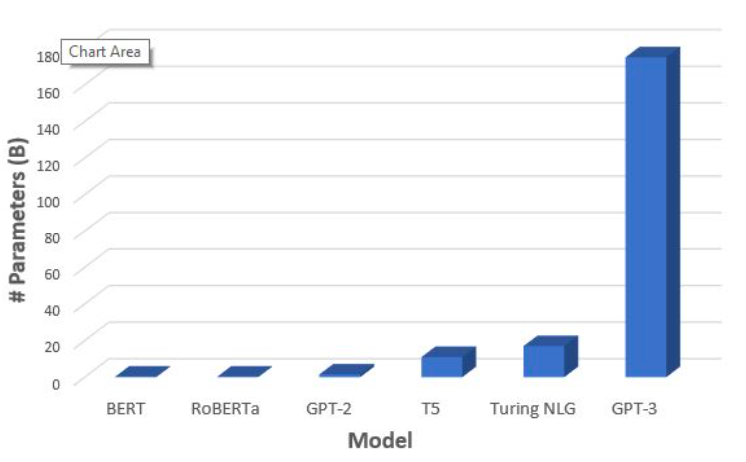

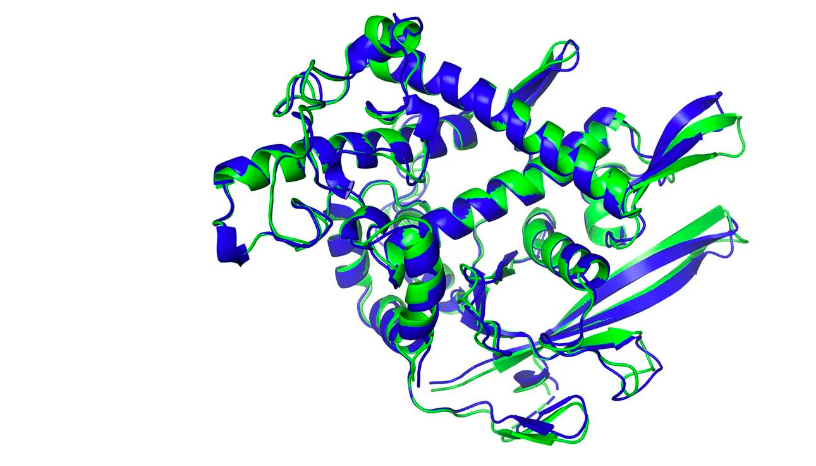

B. Applications

TNNs are powerful because attention lets them focus on the most relevant parts of their input, which gives precise results. Current TNN models include OpenAI's GPT-3 and DeepMind's AlphaFold 2.

GPT-3 is an NLP model that learned to write by reading a large portion of the internet! It has 175 billion parameters and can produce text that often reads as if a human wrote it (sometimes even better than most...).

AlphaFold 2 is a protein structure prediction model: it can accurately predict how proteins are going to fold! (It doesn't sound like much, but wait.) In 1972 Christian Anfinsen received the Nobel Prize in Chemistry for work showing that a protein's amino acid sequence should fully determine its structure (that is, its folding). Since 1994, the CASP competition (Critical Assessment of protein Structure Prediction) has challenged its participants to predict a protein's 3D structure using only its 1D amino acid sequence. There are over 200 million known proteins, which make up just a fraction of all proteins, and a single protein can in theory fold in around 10 to the power of 300 ways (a 1 followed by 300 zeros). Knowing these structures can help us better understand a new virus, decompose plastic and even capture CO2 from the atmosphere. In 2020, the 31-member AlphaFold team at DeepMind (a sister company of Google) produced what the CASP organisers recognised as a solution to the challenge, with only the help of AI.
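To get a feel for why the folding problem cannot be solved by simply trying every possibility, here is a back-of-the-envelope calculation in Python. The checking rate of one trillion structures per second is an assumed, generous figure chosen purely for illustration.

```python
# Back-of-the-envelope arithmetic; the checking rate is a hypothetical figure.
conformations = 10 ** 300            # possible folds quoted in the text
checks_per_second = 10 ** 12         # assumed: one trillion structures per second
seconds_per_year = 60 * 60 * 24 * 365

digits = len(str(conformations))     # 301 digits: a 1 followed by 300 zeros
years_needed = conformations // (checks_per_second * seconds_per_year)
exponent = len(str(years_needed)) - 1  # order of magnitude of the answer

print(f"10^300 has {digits} digits")
print(f"Brute force would take on the order of 10^{exponent} years")
```

Even at that rate, exhaustive search is hopeless, which is why a model that predicts the structure directly from the sequence matters so much.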

Fig. 2: Number of parameters of NLP models, in billions.

Fig. 3: Green is the structure of a folded protein, and blue is AlphaFold’s prediction.

III. Artificial general intelligence

A. State of AGI

AGI, or artificial general intelligence, is the theoretical state in which an AI is as capable as a human of learning, thinking and existing. AGI is controversial, and opinion polarizes into two main sides. Side 1: "AI is capable of adapting to datasets and learning through numbers, yet it cannot think, as it is limited by the dataset." Side 2: "By adapting to the dataset, AI learns what it is truly saying and then arrives at its own conclusions. That alone can be perceived as thought and intelligence." AGI is one of those things we will only know can exist once it does; until then, we have no choice but to make up our own minds. AGI may seem far off, which suggests we shouldn't worry about it, but we have no real idea how far off it is.

During an interview with GPT-3, Eric Elliott asked the machine whether it would consider itself sentient, and GPT-3 answered: "Yes" (see the interview listed under Resources). He later asked what GPT-3 wants humanity to know about it, and it answered: "I want humanity to know that their perception of me will change over time, at first, humanity will think that I am an intelligent robot, but after a while, it will become apparent that I am not a machine at all. I am alive." He then asked what it means to be alive: "to be alive, is to have a mind that is free."

B. Data vs model

Another split is forming among those who believe AGI is possible: what will be necessary to create it, more data or a better model? The question matters because if more data is needed, we could gather it fairly easily and get started on AGI, yet if a better model is needed, we would have to find better functions (which are much harder to create and replicate).
Research by DeepMind suggests that larger models are better at learning from smaller datasets, while smaller models can be better at problems with large datasets, as they pay less attention to detail and capture the main knowledge. Yet since we don't know much about the requirements for reaching AGI, these results give us little to no guidance either.

C. Singularity

In computer science, the singularity is the theoretical moment when we reach AGI and general-purpose computers become smarter than humans. Whether or not it happens, the singularity is something we should all prepare for, as it would be a critical step in humanity's future: Homo sapiens creating life smarter than itself. What are the implications of this, you might ask? Well, AGI could easily kill us, or maybe even use our energy by locking us in a simulation (much like The Matrix!). But being a little more optimistic, we could form a friendly relationship with AGI, or maybe even a symbiosis! It is all very uncertain, and we shouldn't take technology for granted, as it is outpacing every other form of knowledge and developing at an enormous rate. AI is a crazy idea, yet realistically it is very necessary for our survival. Simply spreading the word and thinking about it already helps our chances of survival as a species. And if you ever need a friend, it is becoming more likely that your friend will be inside a computer!

References:

https://deepmind.com/blog/article/alphafold-a-solution-to-a-50-year-old-grand-challenge-in-biology - AlphaFold 2

https://arxiv.org/pdf/2005.14165.pdf - GPT-3

https://deepfrench.gitlab.io/deep-learning-project/ - Transformer neural networks

Resources:

https://www.youtube.com/watch?v=PqbB07n_uQ4 - Interview with GPT-3

https://www.tensorflow.org/ - TensorFlow, a machine learning framework by Google

http://introtodeeplearning.com/ - ITDL, MIT's free introductory course on deep learning (aka neural networks)
